Klein

What's your position on user signals as a ranking factor in 2021?

I think that with Chrome User Experience (UX) reports, user signals are becoming increasingly important. But I still doubt that, as a ranking factor, they are clear enough to outweigh the relevance of Search Engine Optimization (SEO) copy or backlinks.

I'm hesitant to cut back on SEO footer copy or even (reasonable) above-the-fold (ATF) SEO copy to improve UX. I always see the risk of losing synonyms and long-tail terms, even more so with non-English content, where I don't believe the semantic recognition capabilities of search engines are as effective.


User signals are a very vague thing. I've seen them mentioned in a couple of patents, but never really in a patent that focused on them exclusively. These mentions are, I assume, where much of the mythology that Click Through Rate (CTR) and Bounce Rate affect rankings comes from – but that's not at all true and it's not really what they are talking about.

The patents talk about analyzing how users interact with the search results themselves. It would be situations like – they click a link or two and come back, but then end up refining or changing their search in some way. And THEN maybe they see what they want. This can, in volume, give Google an idea of what people are actually hoping to find when typing in a specific search term.

Bounce rate and Click Through Rate (CTR) on their own are not reliable signals at all.

If I ask, "What is Biden's birthday?"… then the answer is usually up there from a specific source, so no click is needed. Or the answer might appear in the meta description of one of the pages. So… as far as Google is concerned – a zero CTR in that case is a good result, not a bad one.

If it's not visible, that same question could drive a click, but then send me to a page that gives me the answer. So I back out and leave. That's a "bounce" – but it's also a satisfactory result. This is why bounce rates aren't a reliable signal.

Seeing how they interact with the results IS useful, though.

If they ask Biden's Birthday but then have to end up putting in a new search term – that means that the answers weren't there for that question.

Here's one of the areas where it sometimes fails, though. Imagine a comparative search. Something like "Blue Widgets" (Note the plural). Here I'm indicating that I might want to compare blue widgets, but maybe not. So, if Google gives me a list of sites that have blue widgets and I interact with several of the sites on the list without refining my search – that's a good thing. It knows I want to compare. But, the failure point is that rather than adding more sites to the list, Google tends to decide to offer up comparison sites and affiliate sites that offer many different ones – in spite of the lack of quality and usefulness of many of those. But it's still that interaction with the Search Engine Result Pages (SERPs) themselves that helps Google understand things and adjust the results.

So… yeah – user signals are big. But absolutely NOT in the way most of the industry thinks they are. NOTHING that actually happens once a person leaves a Search Engine Result Page (SERP) gets measured, either. That's not reliable, since only Chrome has that data, and Chrome users are very different personas from Firefox, Safari, Brave, etc. users. And Chrome only has it if security isn't tamped down hard. (Alexa always had that problem with its site ranking system – it extrapolated values based upon the people who had the Alexa bar installed, and multiplied that by the number of surfers on the web. The problem was that pretty much the only people who had the Alexa bar turned on were SEO people, so SEO sites, digital marketing sites, and client sites of digital marketers who had the bar were artificially inflated in value. That was no good, and anyone took the Alexa ranking seriously for only a year or two.) Using Chrome's UX reports as a ranking signal would have those same flaws. It's a decent tool for you to use to help improve the experience, but as something to affect ranking, it's utterly useless.
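To put rough numbers on the toolbar problem, here is a minimal Python sketch. Every figure in it is invented for illustration (the population sizes, install rates, and the two site names are assumptions, not real Alexa or Chrome data). It only shows how scaling up a skewed panel can flip which site looks bigger.

```python
# All numbers below are invented purely for illustration.
WEB_POPULATION = 1_000_000  # 990,000 general users + 10,000 SEO people

# Panel = people with the toolbar installed, heavily skewed toward SEO people.
PANEL_GENERAL = 990    # 0.1% of the 990,000 general users
PANEL_SEO = 2_000      # 20% of the 10,000 SEO people
PANEL_SIZE = PANEL_GENERAL + PANEL_SEO

# Real audience: 10% of general users visit the recipes site,
# 50% of SEO people visit the SEO blog.
true_visitors = {"recipes-site": 99_000, "seo-blog": 5_000}

# What the panel sees: the same visit rates, applied to the skewed panel.
panel_visitors = {"recipes-site": 99, "seo-blog": 1_000}


def extrapolate(panel_count: int) -> float:
    """Scale a panel count up to the whole web, as a toolbar-based ranking would."""
    return panel_count / PANEL_SIZE * WEB_POPULATION


estimates = {site: extrapolate(count) for site, count in panel_visitors.items()}

true_winner = max(true_visitors, key=true_visitors.get)
estimated_winner = max(estimates, key=estimates.get)

print("Really bigger:", true_winner)
print("Looks bigger from the panel:", estimated_winner)
```

With these made-up inputs the recipes site has far more real visitors, yet the extrapolated numbers put the SEO blog on top, simply because the panel over-represents SEO people.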

It's simply "how people interact with the SERPs" – search, and then one click or no clicks but the user moves on – they probably got their answer. Search and then have to refine the search – it is a signal that the first set of results isn't optimal so maybe they need to adjust it or offer refinement options based upon what users did next. THOSE signals are useful (en masse when patterns evolve). Otherwise, the rest of the things people think of when talking about user signals – nope. Doesn't happen.
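As a rough illustration of that distinction, here is a small Python sketch. It is not how Google does it; the `Session` shape, the labels, and the `refinement_rate` helper are all hypothetical names made up for this example. The point is only that the label depends on whether the searcher had to change the query, not on clicks or bounces.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Session:
    queries: List[str]  # queries in the order the user typed them
    clicks: int         # how many results were clicked (deliberately unused below)


def classify(session: Session) -> str:
    """Label a session by whether the user had to change the search."""
    if len(set(session.queries)) > 1:
        return "refined"           # the first results were probably not optimal
    return "likely_satisfied"      # zero clicks or one click, then the user moved on


def refinement_rate(sessions: List[Session]) -> float:
    """Share of sessions that needed a refinement; only meaningful in volume."""
    if not sessions:
        return 0.0
    refined = sum(1 for s in sessions if classify(s) == "refined")
    return refined / len(sessions)


sessions = [
    Session(["biden birthday"], clicks=0),                       # answer shown on the SERP
    Session(["biden birthday"], clicks=1),                       # one click, then gone
    Session(["blue widgets"], clicks=4),                         # comparing, no refinement
    Session(["blue widgets", "blue widgets warranty"], clicks=2),
]
print(refinement_rate(sessions))
```

Note that the zero-click session and the four-click comparison session both count as "likely satisfied" here, which is why CTR and bounce rate on their own say so little.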

Great answer, my man. I agree. The only thing I'd add is that at a certain scale, if a site goes viral from non-organic traffic, Google notices that via Chrome and acts accordingly.

Truslow

I've not seen any patents that suggest that – nor can I imagine a reliable way to measure that… so, while I can't say that's not true… I can't imagine that it could be.

Again… trusting data from Chrome favors a particular audience and too many enterprises in the past have fallen victim to attempting to use that flawed methodology. Alexa (Amazon) was the big one that comes to mind when you think about "learning that the hard way" but many others have done it too. Google, in the early years, even ventured out that way a bit a few times. (Google Directory – basically a mirror of the DMOZ is one that comes to mind. The system was so overburdened and Google was so reliant on it that it became virtually impossible to get listed in any reasonable amount of time which made it impossible to fairly represent the entire web using the directory as the primary classification system. For a while, there were certain categories where it would take upwards of two years to get a site approved and listed in there.)

The one area where Chrome data IS used is in Core Web Vitals – but only as a means to check the correlation between Lab Data and Field Data. The Field Data is what comes from Chrome, but it's not really used to change ranking – just to make sure that the Lab side (which works whether Chrome is in play or not) is generating numbers that correlate with actual experience numbers. It's a checks and balances system, not really part of the ranking system in and of itself.

Using a limited system that doesn't truly represent the breadth and scope of the potential user base simply isn't a good way to do those types of things. It can be good for quality validation, but is extremely limited and flawed in terms of being able to provide anything useful to say much more than "something may not be right here." It can't say, "Here's the right answer."

Roger » Truslow

The Chrome data is used for the Core Web Vitals (CWV) ranking factor.

The Chrome Field data is what matters. The Lab Data does not matter and is not used by Google.

Field data is not collected by all Chrome browsers, only the ones that have opted into sending feedback.

It's only SOME browsers that contribute to the Chrome User Experience Report aka CrUX. That's why Google will sometimes report not enough data to report CWV scores, because none of the opted in browsers have visited the site enough or at all.

https://developers.google.com/web/tools/chrome-user-experience-report

Chrome User Experience Report | Chrome UX Report | Google Developers

Klein » Roger

I find it hard to believe that the CWV thing is more than an educational measure. I think it's to keep websites from loading content the crawler does not detect, and to make them load fast enough that a Google result doesn't provide bad UX (below a certain threshold of loading time). They could have just said, "Please make sure your website is DOM ready within 2.5 seconds," but instead they make a huge effort to keep everybody on their toes and competing.

Truslow » Roger

That's the User Experience Report – NOT the Core Web Vitals (CWV) that is used to affect rankings. They are related, but two different things.

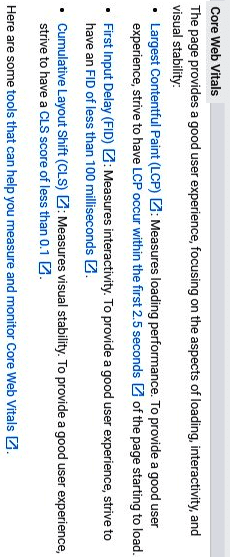

For core web vitals, the only things considered from that report are Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS) – at least right now.

https://developers.google.com/search/docs/advanced/experience/page-experience

So… sorry… yes… the Chrome data is used for the User Experience Report, but that part of the User Experience report is not used for the CWV that affects rankings. As I said, it's useful to make sure that what the other tools are saying is happening is also what's happening in real world scenarios, but it's not something useful enough to be used to actually affect the ranking.


Roger » Truslow

The data from Chrome is field data. The field data is used for CrUX. The Field Data is used for Core Web Vitals and rankings. CWV is based on Field Data and only on field data. These are facts.

The purpose of Lab Data is for diagnostic purposes by SEO users and publishers. That's all it is for. Lab Data is a simulation of a user on a mobile data connection.

Field Data is actual users. That is what is used for CWV and for Ranking.
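For readers who want to see what "based on field data" looks like in practice, here is a minimal Python sketch. It assumes the publicly documented "good" thresholds (LCP up to 2.5 s, FID up to 100 ms, CLS up to 0.1) checked at the 75th percentile of real-user samples. The `MIN_SAMPLES` cutoff and the function names are hypothetical; the real minimum amount of data CrUX needs is not something this thread states.

```python
import math
from typing import Dict, List, Optional

# Documented "good" thresholds for the three metrics.
GOOD_THRESHOLDS = {"lcp_ms": 2500, "fid_ms": 100, "cls": 0.1}

# Hypothetical cutoff: below this, treat the site as "not enough data".
MIN_SAMPLES = 5


def percentile_75(values: List[float]) -> float:
    """75th percentile using the simple nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def assess(field_samples: Dict[str, List[float]]) -> Optional[bool]:
    """True if all three metrics are 'good', False if any is not, None if data is too thin."""
    results = []
    for metric, limit in GOOD_THRESHOLDS.items():
        samples = field_samples.get(metric, [])
        if len(samples) < MIN_SAMPLES:
            return None  # mirrors the "not enough data" case
        results.append(percentile_75(samples) <= limit)
    return all(results)


site = {
    "lcp_ms": [1800, 1900, 2000, 2100, 2200, 2300, 2400, 4000],
    "fid_ms": [10, 20, 30, 40, 50, 60, 70, 300],
    "cls": [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.5],
}
print(assess(site))
```

One slow outlier per metric does not fail the site in this sketch, because only the 75th percentile is compared against each threshold.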

Roger » Koszo

There's no evidence that Google uses Chrome to know if a site is going viral. I've not seen anything where it says it uses it for anything other than CWV.

It can deduce popularity via what we call branded navigation, and there's a patent about that, where they use that signal to re-rank web pages.

I screwed up and misread the patent when it originally came out around 2013 and focused on one sentence, the part about Implied Links, instead of understanding the entire context of the patent, which was about ranking sites with links and branded search queries, called something like Reference Queries in the patent.

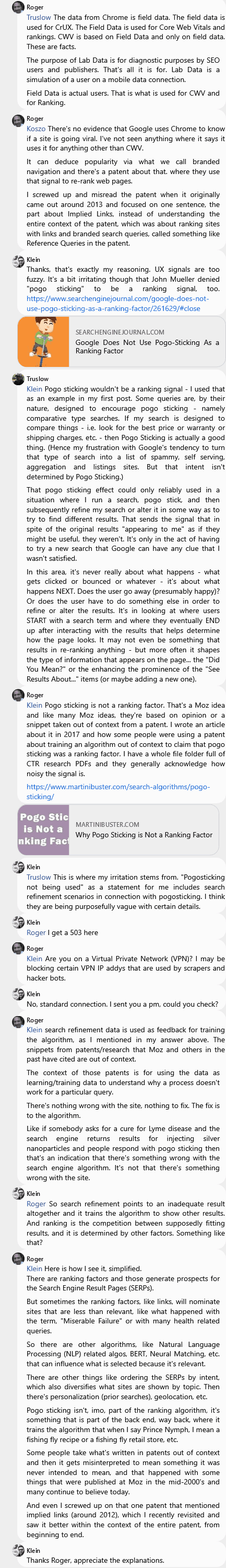

Klein

Thanks, that's exactly my reasoning. UX signals are too fuzzy. It's a bit irritating though that John Mueller denied "pogo sticking" to be a ranking signal, too. https://www.searchenginejournal.com/google-does-not-use-pogo-sticking-as-a-ranking-factor/261629/#close

Google Does Not Use Pogo-Sticking As a Ranking Factor

Truslow » Klein

Pogo sticking wouldn't be a ranking signal – I used that as an example in my first post. Some queries are, by their nature, designed to encourage pogo sticking – namely comparative type searches. If my search is designed to compare things – i.e. look for the best price or warranty or shipping charges, etc. – then Pogo Sticking is actually a good thing. (Hence my frustration with Google's tendency to turn that type of search into a list of spammy, self serving, aggregation and listings sites. But that intent isn't determined by Pogo Sticking.)

That pogo sticking effect could only reliably be used in a situation where I run a search, pogo stick, and then subsequently refine my search or alter it in some way to try to find different results. That sends the signal that in spite of the original results "appearing to me" as if they might be useful, they weren't. It's only in the act of having to try a new search that Google can have any clue that I wasn't satisfied.

In this area, it's never really about what happens – what gets clicked or bounced or whatever – it's about what happens NEXT. Does the user go away (presumably happy)? Or does the user have to do something else in order to refine or alter the results? It's looking at where users START with a search term and where they eventually END up after interacting with the results that helps determine how the page looks. It may not even be something that results in re-ranking anything – but more often it shapes the type of information that appears on the page… the "Did You Mean?" or enhancing the prominence of the "See Results About…" items (or maybe adding a new one).
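A rough way to picture the START-to-END idea is the Python sketch below. It is purely illustrative: `suggest_refinements`, the `min_share` cutoff, and the sample queries are all assumptions made up here, not anything taken from a patent or from Google.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def suggest_refinements(sessions: List[List[str]], min_share: float = 0.5) -> Dict[str, str]:
    """Map a starting query to the query most users ended on, if one clearly dominates."""
    endings: Dict[str, Counter] = defaultdict(Counter)
    for queries in sessions:
        if queries:
            # Only the first and last query of the session matter here.
            endings[queries[0]][queries[-1]] += 1

    suggestions: Dict[str, str] = {}
    for start, counts in endings.items():
        end, n = counts.most_common(1)[0]
        # Skip cases where most people never changed the query at all.
        if end != start and n / sum(counts.values()) >= min_share:
            suggestions[start] = end
    return suggestions


sessions = [
    ["blue widgets", "blue widgets price comparison"],
    ["blue widgets", "blue widget reviews", "blue widgets price comparison"],
    ["blue widgets"],
    ["biden birthday"],
]
print(suggest_refinements(sessions))
```

In this toy data most people who start on "blue widgets" end on a price comparison query, so that becomes a candidate for a refinement prompt, while "biden birthday" produces nothing because nobody had to change the search.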

Roger » Klein

Pogo sticking is not a ranking factor. That's a Moz idea and, like many Moz ideas, it's based on opinion or a snippet taken out of context from a patent. I wrote an article about it in 2017 and how some people were using a patent about training an algorithm out of context to claim that pogo sticking was a ranking factor. I have a whole file folder full of CTR research PDFs and they generally acknowledge how noisy the signal is. https://www.martinibuster.com/search-algorithms/pogo-sticking/

Why Pogo Sticking is Not a Ranking Factor

Klein » Truslow

This is where my irritation stems from. "Pogosticking not being used" as a statement for me includes search refinement scenarios in connection with pogosticking. I think they are being purposefully vague with certain details.

Klein » Roger

I get a 503 here

Roger » Klein

Are you on a Virtual Private Network (VPN)? I may be blocking certain VPN IP addys that are used by scrapers and hacker bots.

Klein

No, standard connection. I sent you a pm, could you check?

Roger » Klein

Search refinement data is used as feedback for training the algorithm, as I mentioned in my answer above. The snippets from patents/research that Moz and others in the past have cited are out of context.

The context of those patents is for using the data as learning/training data to understand why a process doesn't work for a particular query.

There's nothing wrong with the site, nothing to fix. The fix is to the algorithm.

Like if somebody asks for a cure for Lyme disease and the search engine returns results for injecting silver nanoparticles and people respond with pogo sticking then that's an indication that there's something wrong with the search engine algorithm. It's not that there's something wrong with the site.

Klein » Roger

So search refinement points to an inadequate result altogether and it trains the algorithm to show other results. And ranking is the competition between supposedly fitting results, and it is determined by other factors. Something like that?

Roger » Klein

Here is how I see it, simplified.

There are ranking factors and those generate prospects for the Search Engine Result Pages (SERPs).

But sometimes the ranking factors, like links, will nominate sites that are less than relevant, like what happened with the term, "Miserable Failure" or with many health related queries.

So there are other algorithms, like Natural Language Processing (NLP) related algos, BERT, Neural Matching, etc. that can influence what is selected because it's relevant.

There are other things like ordering the SERPs by intent, which also diversifies what sites are shown by topic. Then there's personalization (prior searches), geolocation, etc.

Pogo sticking isn't, imo, part of the ranking algorithm, it's something that is part of the back end, way back, where it trains the algorithm that when I say Prince Nymph, I mean a fishing fly recipe or a fishing fly retail store, etc.

Some people take what's written in patents out of context, and then it gets misinterpreted to mean something it was never intended to mean. That happened with some things that were published at Moz in the mid-2000s and that many continue to believe today.

And even I screwed up on that one patent that mentioned implied links (around 2012), which I recently revisited and understood better within the context of the entire patent, from beginning to end.

Klein

Thanks Roger, appreciate the explanations.
