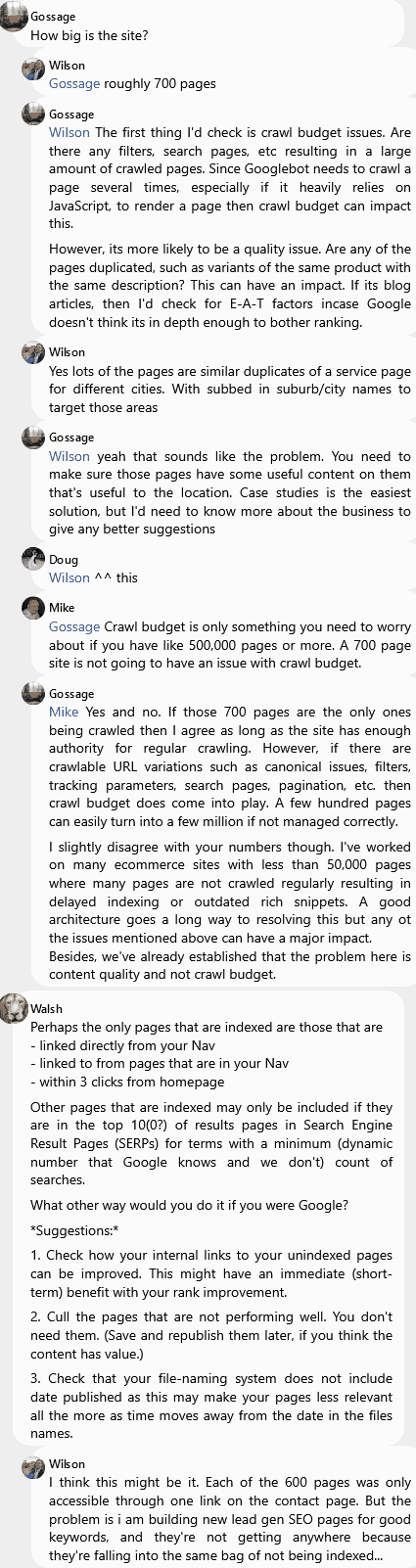

Wilson

Can anyone help me understand why a huge proportion of my web pages are marked as 'Crawled – currently not indexed' or 'Discovered – currently not indexed' in Google Search Console? I am using Rank Math to automatically submit a sitemap.

How big is the site?

Roughly 700 pages

Gossage » Wilson

The first thing I'd check is crawl budget. Are there any filters, search pages, etc. generating a large number of crawlable URLs? Googlebot may need to fetch a page several times to render it, especially if the page relies heavily on JavaScript, so crawl budget can affect how quickly pages get processed.

However, it's more likely to be a quality issue. Are any of the pages duplicated, such as variants of the same product with the same description? That can have an impact. If it's blog articles, I'd check E-A-T factors, in case Google doesn't think they're in-depth enough to bother ranking.

Wilson

Yes, lots of the pages are near-duplicates of a service page for different cities, with suburb/city names subbed in to target those areas.
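To get a rough feel for how alike swapped-city pages are, you can compare their text. This is a minimal sketch assuming word 3-shingles and Jaccard similarity as the measure; Google doesn't publish how it detects near-duplicates, and the plumbing template and suburb names below are hypothetical:

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets: 1.0 means identical wording."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical location-page template with only the city name swapped in.
TEMPLATE = (
    "We offer fast, reliable plumbing repairs in {city}. Our licensed team "
    "serves homes and businesses across {city} and nearby suburbs, with "
    "same-day call-outs, upfront pricing and a workmanship guarantee on every job."
)

page_a = TEMPLATE.format(city="Parramatta")
page_b = TEMPLATE.format(city="Penrith")
print(f"similarity: {jaccard(page_a, page_b):.2f}")
```

Only the few shingles touching the city name differ, so the longer the shared boilerplate, the closer the score gets to 1.0.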

Gossage » Wilson

Yeah, that sounds like the problem. You need to make sure those pages have content that's genuinely useful for each location. Case studies are the easiest solution, but I'd need to know more about the business to give better suggestions.

Doug » Wilson

This

Mike » Gossage

Crawl budget is only something you need to worry about if you have something like 500,000 pages or more. A 700-page site is not going to have a crawl budget issue.

Gossage » Mike

Yes and no. If those 700 pages are the only ones being crawled then I agree as long as the site has enough authority for regular crawling. However, if there are crawlable URL variations such as canonical issues, filters, tracking parameters, search pages, pagination, etc. then crawl budget does come into play. A few hundred pages can easily turn into a few million if not managed correctly.
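The URL-variation point can be illustrated with a small sketch. Normalizing URLs (dropping tracking parameters and fragments, sorting query strings) shows how many crawled URLs collapse to a handful of real pages, and a bit of arithmetic shows how faceted filters multiply. The ignored-parameter list, the trailing-slash rule and the facet counts are assumptions for illustration, not anything Google publishes:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: on this hypothetical site these parameters never change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid", "sessionid"}


def normalize(url: str) -> str:
    """Collapse URL variants: lowercase scheme/host, drop the fragment and ignored
    parameters, sort the remaining parameters, and strip a trailing slash
    (an assumption; only valid if /page and /page/ serve the same content)."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


crawled = [
    "https://example.com/services/plumbing/",
    "https://example.com/services/plumbing?utm_source=facebook",
    "https://Example.com/services/plumbing?gclid=abc123",
    "https://example.com/services/plumbing#quote",
    "https://example.com/shop?color=red&size=m",
    "https://example.com/shop?size=m&color=red&sessionid=42",
]
distinct = {normalize(u) for u in crawled}
print(f"{len(crawled)} crawled URLs -> {len(distinct)} distinct pages")  # prints "6 crawled URLs -> 2 distinct pages"

# Hypothetical faceted navigation: 4 filters with 10 values each (or unset), 3 sort orders.
variants_per_category = (10 + 1) ** 4 * 3
categories = 50
print(variants_per_category * categories)  # 2196150 crawlable URLs from 50 category pages
```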

I slightly disagree with your numbers, though. I've worked on many e-commerce sites with fewer than 50,000 pages where many pages were not crawled regularly, resulting in delayed indexing or outdated rich snippets. A good architecture goes a long way toward resolving this, but any of the issues mentioned above can have a major impact.

Besides, we've already established that the problem here is content quality and not crawl budget.

Walsh

Perhaps the only pages that are indexed are those that are:

– linked directly from your nav

– linked to from pages that are in your nav

– within 3 clicks of the homepage
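The "3 clicks" idea is a hypothesis rather than a documented Google rule, but click depth is easy to measure. Here is a minimal sketch using breadth-first search over an internal-link graph, assuming you already have the graph (for example, exported from a crawler) as a dict of page to linked pages. The pages below are hypothetical, modelled on city pages reachable only via the contact page:

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search from the homepage; depth = fewest clicks to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/services", "/about", "/contact"],
    "/services": ["/services/plumbing"],
    "/contact": ["/service-areas"],
    "/service-areas": ["/service-areas/sydney"],
    "/service-areas/sydney": ["/plumbing-parramatta", "/plumbing-penrith"],
}
# Every page you expect to be indexed (e.g. taken from the sitemap).
known_pages = set(site) | {t for targets in site.values() for t in targets} | {"/orphan-page"}

depths = click_depths(site, "/")
too_deep = sorted(p for p, d in depths.items() if d > 3)
unreachable = sorted(known_pages - set(depths))
print("deeper than 3 clicks:", too_deep)
print("not reachable from the homepage:", unreachable)
```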

Other pages may only be indexed if they rank in the top 10 (or 100?) results in Search Engine Results Pages (SERPs) for terms with a minimum number of searches (a dynamic threshold that Google knows and we don't).

What other way would you do it if you were Google?

*Suggestions:*

1. Check how your internal links to your unindexed pages can be improved. This might give your rankings an immediate (short-term) boost.

2. Cull the pages that are not performing well. You don't need them. (Save and republish them later, if you think the content has value.)

3. Check that your file-naming system doesn't include the publish date, as this may make your pages seem less relevant the further time moves from the date in the file names.
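Suggestions 1 and 3 can be partly audited from the same kind of link data. This sketch assumes a hypothetical page-to-outgoing-links dict. It counts inbound internal links per page, flagging pages with fewer than 2 (an arbitrary threshold), and uses a rough regex heuristic, which can produce false positives, to spot dates in URL slugs:

```python
import re
from collections import Counter

# Rough heuristic: a year (19xx/20xx) followed by a month, optionally a day.
DATE_IN_SLUG = re.compile(r"(19|20)\d{2}[-/]?(0[1-9]|1[0-2])([-/]?(0[1-9]|[12]\d|3[01]))?")


def inbound_counts(links: dict) -> Counter:
    """Count how many distinct pages link to each URL (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


# Hypothetical page -> outgoing internal links.
site = {
    "/": ["/services", "/blog", "/contact"],
    "/services": ["/", "/blog", "/contact", "/plumbing-parramatta"],
    "/blog": ["/", "/services", "/contact", "/blog/2021-12-plumbing-tips", "/plumbing-parramatta"],
    "/contact": ["/", "/services", "/blog", "/plumbing-penrith"],
}
pages = set(site) | {t for targets in site.values() for t in targets}

counts = inbound_counts(site)
weakly_linked = sorted(p for p in pages if counts[p] < 2)  # threshold of 2 is arbitrary
dated_slugs = sorted(p for p in pages if DATE_IN_SLUG.search(p))
print("fewer than 2 inbound links:", weakly_linked)
print("date in URL:", dated_slugs)
```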

I think this might be it. Each of the 600 pages was only accessible through one link on the contact page. But the problem is that I'm building new lead-gen SEO pages for good keywords, and they're not getting anywhere because they're ending up in the same "not indexed" bucket…

Carolyn

Super normal right now. There is a lag between crawling, rendering and indexing into the primary index. Google is behind on processing, so don't waste a lot of time sweating it. It's not you.

Thanks, but I've had these pages up since December last year?

Kathy » Wilson

Same thing happened to me. I started requesting indexing manually on my category pages, and at the same time I made it easier for Google to find and index the pages. After about 4 months it finally kicked in and started indexing the individual blog posts, slowly but surely.

Carolyn

Yeah, it's taking a long time. AND I've noticed that some days there's been a rollback of sorts: I publish a lot of SEO test pages, and things are fine one day, then the next day every single test page is out, and 3 days later they're back in and sailing along. So as long as you're creating pages that have some internal links on your site, resubmitting your sitemap in GSC and manually requesting indexing for those pages is all you can do, I'm afraid. G takes its own time, and it's a LONG time right now.

Kathy

Could be normal, but I've had the same problem with Rank Math and never had it until I switched from Yoast. I recently reported problems to Rank Math and they just released a fix: in that case it was marking category pages as noindex even though my settings were correct. Make sure it's not something like that, and make sure you're running the latest version.
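To rule out this scenario (a plugin emitting noindex despite your settings), you can check a page's source and response headers for a noindex directive. This is a minimal sketch using Python's standard-library HTML parser. The sample markup and headers are hypothetical, and it does not parse user-agent-prefixed X-Robots-Tag values such as "googlebot: noindex":

```python
from html.parser import HTMLParser

NOINDEX = {"noindex", "none"}  # "none" means noindex, nofollow


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives.update(d.strip().lower() for d in attr.get("content", "").split(","))


def is_noindexed(html: str, headers: dict) -> bool:
    """True if the page's robots meta tags or X-Robots-Tag header contain noindex.
    Simplification: user-agent-prefixed header values are not parsed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    header_directives = {d.strip().lower() for d in header.split(",")}
    return bool(NOINDEX & (parser.directives | header_directives))


# Hypothetical category-page source, as you'd see it via "view source".
page = '<html><head><meta name="robots" content="noindex, follow"/></head></html>'
print(is_noindexed(page, {"Content-Type": "text/html"}))  # True
```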

Biron

Could be many reasons:

– server capacity and response time <- super important and makes a big difference in my experience

– link depth/internal interlinking to important pages, site nav structure <- second in importance, I'd say

– page/site quality (fast load times, mobile-friendliness, useful content) <- Google has said that those specific exclusion types can be due to quality issues

– errors in the sitemap, or other site errors. Check the Coverage report, and the Crawl Stats report in Search Console settings, to see if your server/site is healthy

– check out Google's SEO Starter Guide and make sure your on-page SEO is optimized as well. Inspect pages in Google Search Console (GSC) to see whether Google can access all important resources and see the page content properly

– I haven't used Rank Math before, so I'm not sure how it works, but make sure lastmod is set properly. You can inspect the sitemap file to see when it was last crawled. Don't change the sitemap URL each time, if that's something you're doing. Also make sure date stamps are updating properly on-page.

– is your robots.txt file set up correctly? Include the sitemap URL there as well.
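For the last two points, here is a sketch of what a minimal sitemap with lastmod values looks like (following the sitemaps.org protocol), plus a quick robots.txt check. The URLs and dates are hypothetical. Note that Python's urllib.robotparser applies rules in file order rather than Google's most-specific-rule matching, so treat its answer as an approximation:

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict) -> str:
    """Build a sitemap from {absolute URL: last-modified date (W3C format, e.g. 2023-01-15)}.
    lastmod should change only when the page content really changes."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


def check_robots(robots_txt: str, urls: list) -> tuple:
    """Return (URLs Googlebot would be blocked from, Sitemap URLs declared in robots.txt).
    urllib.robotparser applies rules in file order, not Google's longest-match rule."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [u for u in urls if not parser.can_fetch("Googlebot", u)]
    return blocked, parser.site_maps() or []


# Hypothetical site data.
pages = {
    "https://example.com/plumbing-parramatta": "2023-01-15",
    "https://example.com/plumbing-penrith": "2023-02-03",
}
robots_txt = """User-agent: *
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap.xml
"""

print(build_sitemap(pages))
blocked, sitemaps = check_robots(robots_txt, list(pages) + ["https://example.com/wp-admin/options.php"])
print("blocked:", blocked)
print("sitemaps declared:", sitemaps)
```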

Also, live-inspect some pages to see whether they really haven't been indexed. Search Console's reports are delayed, and it sometimes happens to me that pages were crawled after the report was last updated.

I've started sites from scratch before and had no indexing issues, even on day 1. Make sure you're abiding by Google's technical and content guidelines, requirements, and recommendations as much as possible.
